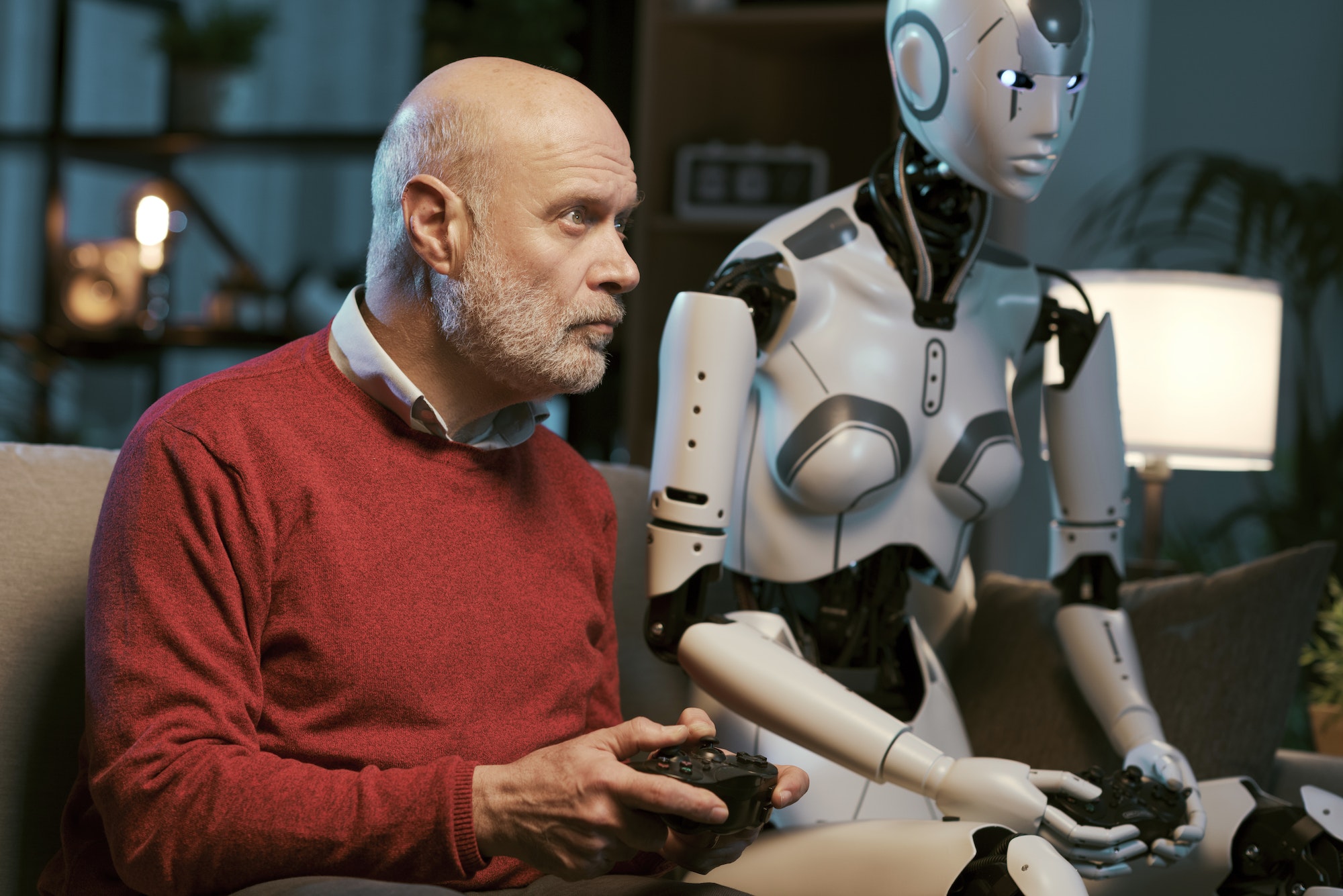

Technologies impacting the gaming industry

The gaming industry has shifted from games played on phones to consoles and PCs. New technologies are

Embracing Diversity and Integrity, Inspiring Innovation, and Ensuring Customer-Centricity to Drive Exceptional Results and Transform Industries

Dedicated to Exceeding Expectations by Upholding Uncompromising Quality, Delivering with Utmost Care, and Empowering Your Path to Success

Fueling Progress and Empowering Positive Change through Passionate Commitment, Collaborative Spirit, and Ethical Leadership for a Sustainable Future

Unleashing the Synergy of Human Potential and AI Innovation: Forging a Limitless Future of Endless Possibilities

To revolutionize industries through innovative problem-solving, leveraging our expertise in user experience, product development, automation, and cloud technology.

To be the trusted global technology partner, empowering businesses with cutting-edge solutions and delivering exceptional value to our clients worldwide.

Safeguarding online platforms with robust measures and proactive risk mitigation for user protection and trust.

Thorough evaluation and moderation of digital content to maintain quality and ensure compliance with guidelines.

Adapting and tailoring content to resonate with diverse cultures and languages, enabling global engagement and impact.

Crafting cutting-edge software solutions tailored to your unique business requirements, driving innovation and digital transformation.

Implementing rigorous testing processes to ensure software quality, reliability, and an exceptional user experience.

Efficiency and productivity amplified through intelligent automation technologies, streamlining workflows and accelerating business success.

With years of experience, we specialize in providing customized solutions that precisely align with your unique business needs, ensuring maximum efficiency and success.

We prioritize our clients' satisfaction above all else, consistently delivering top-notch service, proactive communication, and reliable support to build long-term partnerships based on trust and mutual success.

The gaming industry has always been dominated by men, with few female players engaging in competitive play or

Introduction to blockchain

Gamers may use blockchain to generate unique in-game objects that can be exchanged or sold